IMEDNets: Image-to-Motion Encoder-Decoder Networks

Image-to-Motion Encoder-Decoder Networks

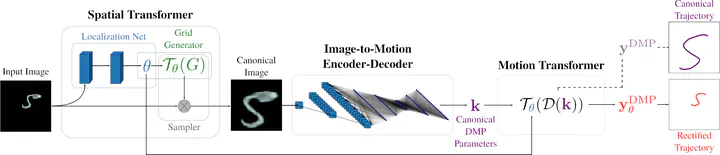

This strand of research focuses on developing deep neural network architectures to enable robots to learn visuomotor skills, specifically translating visual inputs into robot motion trajectories. Early contributions involved using convolutional encoder-decoder networks to robustly map digit images into dynamic movement primitives (DMPs) for robot handwriting tasks and improving generalization by incorporating spatial transformer modules to handle arbitrarily transformed inputs. Some of the core research work introduced a specialized trajectory-level loss function to enhance training effectiveness by directly optimizing trajectory similarity rather than abstract parameters, leading to better-quality learned motions. Recent advances have further evolved the approach by employing recurrent neural architectures capable of predicting complex interactions, such as human–robot or robot–robot handover tasks, using simulation-based training data augmentation to achieve strong real-world performance even with minimal calibration. This body of work collectively advances the capability of robots to flexibly and reliably translate visual information into precise motor actions in diverse environments.

Roles

Aug 1 2019 - Oct 31 2019: Visiting Researcher @ ATR & Nov 1 2019 - Feb 7 2020: Senior Assistant | Postdoc. @ JSI

- Extended prior work on image-to-motion encoder-decoder deep neural network architectures to handle sequential data in human-robot interaction scenarios, work that ultimately led to the development of the RIMEDNet (recurrent image-to-motion encoder-decoder network) model for use in handover prediction tasks.

May 1 2018 - Apr 30 2019: Guest Researcher | Postdoc. @ ATR

- Designed novel STIMEDNet (spatial transformer image-to-motion encoder-decoder network) architecture allowing a robot to learn hand-writing trajectories from images of digits in different poses.

- Extended prior IMEDNet (image-to-motion encoder-decoder network) architecture to use convolutional input layers, demonstrating improved performance with the novel CIMEDNet (convolutional image-to-motion encoder-decoder network) model.

Awards

Videos

IEEE TNNLS 2024: Simulation-aided handover prediction from video using recurrent image-to-motion networks

Video: Matija Mavsar’s YouTube Channel. Credit: Matija Mavsar, Jožef Stefan Institute.

ICRA 2019: Learning to Write Anywhere with Spatial Transformer Image-to-Motion Encoder-Decoder Networks

Video: Barry Ridge’s YouTube Channel. Credit: Barry Ridge, Rok Pahič, Jožef Stefan Institute.